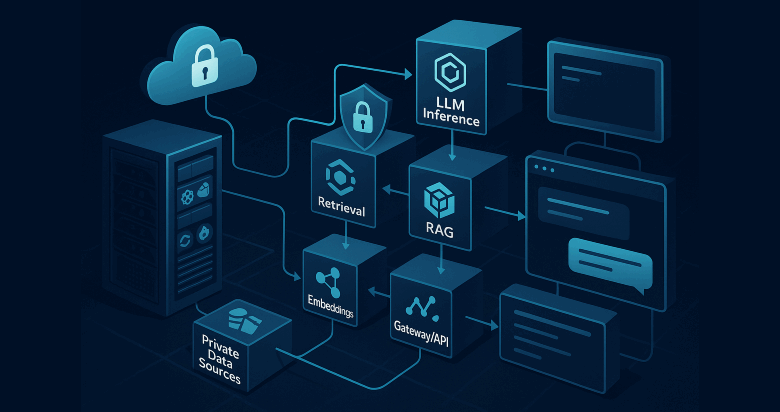

I’ve been tinkering with LiteLLM at home, and pairing it with my OpenWebUI setup has unlocked something surprisingly practical: real budgets I can enforce automatically so there are no surprise bills. LiteLLM gives me one endpoint for many providers, per-key rate limits that fit each person in my household, and a clean way to bring local models into the same flow. In this post I break down how it works, why it matters for home-brew projects or small teams, and how to deploy it with Docker, end to end. I’ll also show simple recipes for monthly caps, per-key tokens-per-minute or requests-per-minute, and routing that prefers cheap or local models first.

Open WebUI: A Pay As You Go, multi-model, family-friendly alternative to ChatGPT Plus

Open WebUI is an extensible, self-hosted interface for large language models that feels familiar if you have used ChatGPT, yet it gives you control over models, costs, and access. I have been thinking about Open WebUI as a self hosted alternative on a Pay As You Go model versus paying …