This article documents the decision to dismantle that architecture and replace it with a simpler, deterministic system built around cronjobs, webhooks, and reusable scripts generated with close AI assistance. By isolating intelligence to specific tasks rather than running it continuously, I reduced daily costs to under one dollar while significantly …

Agentic Repos: The New CI/CD Is CI/CD + Tasks

CI/CD automated what happens after code is written. Agentic repos automate the work around code: triage, upgrades, tests, and refactors. The key shift is that the output is not chat text; it is a reviewable PR with diffs, commands run, evidence, and rollback notes. Done right, the value is leverage without surrendering control, because humans remain the merge gate.

OpenClaw Beyond the Hype: Practical Use Cases and a Hands-On Ubuntu Install

AI assistants are everywhere right now, but most of them stop at conversation. OpenClaw takes a different path. It is a self-hosted, autonomous AI assistant designed to actually do things across your system and the tools you already use. From managing messages and automating workflows to running scripts and …

OpenClaw: From Talking Bots to Acting Systems

OpenClaw is not another chatbot. It is an AI-driven control layer designed to live inside real systems. Formerly known as ClawdBot, OpenClaw evolved from a conversational experiment into an event-driven assistant that reacts to time, signals, and infrastructure. Built on cronjobs, webhooks, and an agent that reasons about intent rather than rules, OpenClaw coordinates workflows, monitors systems, and takes action across tools without constant human input. It is quiet when nothing matters, decisive when something does, and designed for production environments where automation needs judgment, not just scripts.

DevDay Recap: Everything OpenAI Announced

OpenAI used its DevDay stage on October 6, 2025 to push ChatGPT from a capable assistant into a platform you can build on. The headliners were apps that run directly inside ChatGPT via a new Apps SDK, AgentKit for building and shipping agentic workflows, general availability of Codex with a new SDK and Slack integration, and a strategic AMD partnership aimed at scaling compute to six gigawatts. This post breaks down what shipped, why it matters, and how developers can start building today.

One Dashboard To Rule Your Servers: Grafana + Prometheus for Proxmox, KVM, VPS and Dedicated Boxes

If you have a mix of Proxmox nodes and external servers, getting a clean, truthful view of CPU, memory and disk is surprisingly hard. Proxmox’s built-in charts blur the line between free, cached and used memory, which makes resource planning awkward. I also needed email alerts for low disk space and high CPU or memory usage after learning the hard way that a full disk can freeze VMs and turn recovery into a risky dance. Finally, I wanted all nodes in one place: not just Proxmox nodes, but also remote KVMs, VPSs and dedicated servers from various providers.

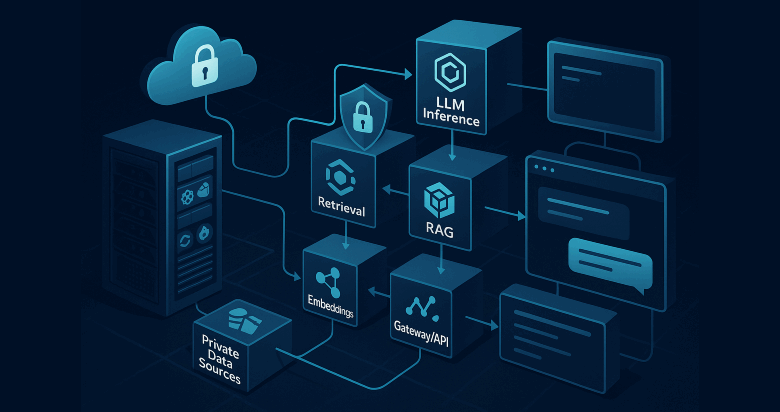

LiteLLM at Home: One Endpoint, Real Budgets, Zero Surprises

I’ve been tinkering with LiteLLM at home, and pairing it with my OpenWebUI setup has unlocked something surprisingly practical: real budgets I can enforce automatically so there are no surprise bills. LiteLLM gives me one endpoint for many providers, per-key rate limits that fit each person in my household, and a clean way to bring local models into the same flow. In this post I break down how it works, why it matters for homebrew projects or small teams, and how to deploy it end to end with Docker. I’ll also show simple recipes for monthly caps, per-key tokens-per-minute or requests-per-minute limits, and routing that prefers cheap or local models first.

Open WebUI: A Pay As You Go, multi-model, family-friendly alternative to ChatGPT Plus

Open WebUI is an extensible, self-hosted interface for large language models that feels familiar if you have used ChatGPT, yet it gives you control over models, costs, and access. I have been thinking about Open WebUI as a self-hosted alternative on a Pay As You Go model versus paying …

Mastering AI Prompt Writing

Writing effective prompts for AI is less about magical keywords and more about clear communication. Whether you’re creating documentation, training a colleague, or automating tasks, the way you frame your request to an AI determines the quality of the result. In this extended guide, we’ll go step by step through prompt structures, metaphors that make the concept click, workplace training scenarios, and a library of examples you can use immediately.

Proxmox for the HomeLab

Meet Proxmox VE (Virtual Environment), a free and open-source virtualization platform that combines full KVM-based virtualization and LXC containers under a single web interface. It’s popular among home lab enthusiasts and IT pros who want enterprise-grade tools without enterprise-level licensing fees.

This post takes a deep dive into what Proxmox is and how to set up a powerful home lab with it: from the basics of installation to more advanced configurations like VLANs, backups, and clustering.