Open WebUI is an extensible, self-hosted interface for large language models that feels familiar if you have used ChatGPT, yet it gives you control over models, costs, and access. I have been thinking about Open WebUI as a self-hosted, pay-as-you-go alternative to paying 20 dollars each month for every user, which adds up quickly and makes little sense when at least one person in the group is a light user. In this post I explain how Open WebUI brings multiple models into one clean UI, how you can decide exactly which models are available to which users, how request limits can be set locally when needed, and where the rough edges still are, such as memories and the manual setup for web search and image generation. I close with a simple setup guide and a cost sanity check for a household of two to four.

Open WebUI started as a friendly face for local models, then grew into a full platform that can talk to Ollama and any OpenAI-compatible API. You can run it entirely offline with local models or point it at hosted models for usage-based billing. The project has active docs, frequent releases, and a community that now claims more than 250 thousand users. If your goal is to replace per-seat subscriptions with a single server you control, this is a compelling path to try.

What Open WebUI is

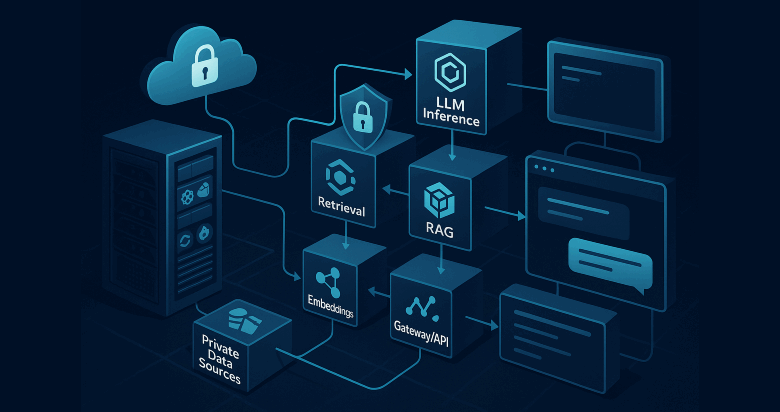

Open WebUI is a self-hosted, extensible chat interface for LLMs. It supports multiple backends, including Ollama for local models and any OpenAI-compatible server for hosted models. You can switch models inside a single chat interface, use Retrieval Augmented Generation, add function pipelines, and keep the whole thing on your own hardware if privacy is a priority.
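To make "OpenAI-compatible" concrete, here is a minimal sketch of why one interface can sit in front of both local and hosted models: the request has the same shape and only the base URL and the key change. This is my own illustration, not Open WebUI's code. The base URLs are Ollama's default local endpoint and OpenAI's public one, while the model names and the `build_chat_request` helper are placeholders I made up. The function only builds the request and never sends it.

```python
import json
import urllib.request

# Ollama's OpenAI-compatible endpoint on its default local port.
LOCAL_BASE_URL = "http://localhost:11434/v1"
# A hosted provider that speaks the same protocol.
HOSTED_BASE_URL = "https://api.openai.com/v1"


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str = "") -> urllib.request.Request:
    """Build (but do not send) a chat completion request.

    Hypothetical helper for illustration only.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Hosted backends normally expect a bearer token.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


if __name__ == "__main__":
    # Model names and the key below are placeholders, not recommendations.
    local = build_chat_request(LOCAL_BASE_URL, "llama3", "Hello")
    hosted = build_chat_request(HOSTED_BASE_URL, "gpt-4o-mini", "Hello",
                                api_key="sk-placeholder")
    print(local.full_url)
    print(hosted.full_url)
```

Swapping backends is then a matter of configuration rather than code, which is what lets the model dropdown mix local and hosted entries.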

ChatGPT Plus is priced per user per month. For a household of two to four people, that is 40 to 80 dollars every month if everyone needs Plus features. With Open WebUI, you can connect to usage-based APIs or run local models where the marginal cost of an extra message is zero. That makes occasional use much cheaper while still letting a heavy user spend more when they actually need it.
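The arithmetic is simple enough to sketch. The 20 dollars per user comes from the subscription price above. Everything else here is an assumption I picked for illustration: the blended API price of 5 dollars per million tokens and the monthly token counts for each family member. Plug in your own provider's prices and your own usage before drawing conclusions.

```python
# From the article: a hosted subscription costs 20 dollars per user per month.
SUBSCRIPTION_PER_USER = 20.00

# ASSUMPTION: illustrative blended API price, in dollars per million tokens.
# Check your provider's current price list before relying on this number.
PRICE_PER_MILLION_TOKENS = 5.00


def subscription_cost(users: int) -> float:
    """Monthly cost when every user has their own subscription."""
    return users * SUBSCRIPTION_PER_USER


def usage_cost(tokens_per_user: dict[str, int]) -> float:
    """Monthly cost when the whole household shares one usage-based API key."""
    total_tokens = sum(tokens_per_user.values())
    return total_tokens / 1_000_000 * PRICE_PER_MILLION_TOKENS


def break_even_tokens() -> float:
    """Tokens one user must consume per month before a subscription is cheaper."""
    return SUBSCRIPTION_PER_USER / PRICE_PER_MILLION_TOKENS * 1_000_000


if __name__ == "__main__":
    # ASSUMPTION: hypothetical monthly usage for a household of four.
    household = {
        "heavy_adult": 3_000_000,
        "light_adult": 500_000,
        "kid_1": 500_000,
        "kid_2": 50_000,
    }
    print(f"Subscriptions: ${subscription_cost(len(household)):.2f}")
    print(f"Pay as you go: ${usage_cost(household):.2f}")
    print(f"Break-even per user: {break_even_tokens():,.0f} tokens")
```

The break-even figure is the useful one: anyone who stays below it each month costs less on usage-based billing than on a subscription, under the assumed price.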

Is there any alternative to self-hosting? Hosted alternatives like CheapGPT use pay-as-you-go pricing with a small fee per request, so your total cost scales with usage. Keep in mind, though, the danger of signing up for websites that receive your data. For that reason I DO NOT RECOMMEND CheapGPT as an alternative.

Open WebUI has roles and permissions. The first account becomes an administrator, and you can configure what each user can access. That means you can expose a smaller set of safe, inexpensive models for kids and a wider set of advanced models for yourself, all without handing out the same credentials.
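Here is how I picture that access model, as a small sketch. This is not Open WebUI's internal schema or API. The `Model` and `User` classes, the group names, and the `visible_models` function are all hypothetical names I invented to show the idea of filtering the model dropdown per user.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Model:
    name: str
    hosted: bool  # True when every request is billed by a provider


@dataclass
class User:
    name: str
    role: str  # "admin" or "user"
    groups: set[str] = field(default_factory=set)


def visible_models(user: User, models: list[Model],
                   access: dict[str, set[str]]) -> list[str]:
    """Return the model names this user should see in the dropdown.

    `access` maps a model name to the groups allowed to use it.
    Admins see everything; a model with no entry is hidden from regular users.
    """
    if user.role == "admin":
        return [m.name for m in models]
    return [m.name for m in models if access.get(m.name, set()) & user.groups]


if __name__ == "__main__":
    # Hypothetical model names and groups, for illustration only.
    models = [
        Model("local-small", hosted=False),
        Model("hosted-reasoning", hosted=True),
    ]
    access = {
        "local-small": {"kids", "adults"},
        "hosted-reasoning": {"adults"},
    }
    kid = User("kid", "user", {"kids"})
    parent = User("parent", "user", {"adults"})
    print(visible_models(kid, models, access))
    print(visible_models(parent, models, access))
```

Hiding unlisted models by default is a deliberate choice in this sketch: a newly added hosted model stays invisible to the kids until someone grants access.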

By default there are no artificial message caps. If you want guardrails, you can add them. The built-in Pipelines framework includes a rate limit filter and conversation turn limits. Many people also pair Open WebUI with a LiteLLM proxy to enforce per-user budgets, rate limits, and virtual keys. This gives you daily or monthly caps and lets you bypass limits for a particular user or model when needed.
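If you have not met a rate limit filter before, the core idea fits in a few lines. The sketch below is a generic sliding-window limiter with an exemption list. It is not the Pipelines filter itself and it does not use the Pipelines or LiteLLM APIs; the class name, its parameters, and the user names are my own assumptions.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowLimiter:
    """Allow at most `max_requests` per user within `window_seconds`.

    Hypothetical illustration of the idea, not Open WebUI's filter.
    """

    def __init__(self, max_requests: int, window_seconds: float,
                 exempt: frozenset = frozenset()):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.exempt = set(exempt)  # users who bypass the limit
        self._history = defaultdict(deque)  # user -> timestamps of allowed requests

    def allow(self, user: str, now: Optional[float] = None) -> bool:
        if user in self.exempt:
            return True
        now = time.monotonic() if now is None else now
        timestamps = self._history[user]
        # Drop requests that have aged out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False
        timestamps.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=2, window_seconds=60,
                                   exempt=frozenset({"parent"}))
    for second in (0, 1, 2):
        print("kid", second, limiter.allow("kid", now=second))
    print("parent", limiter.allow("parent", now=2))
```

Passing `now` explicitly is only there to make the behavior easy to test; in real use you would let it default to the clock.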

Practical Use Cases

Family setup with mixed usage

Two adults and two kids share the same server. Kids can only see local models in the dropdown, which cost nothing to run. One adult is allowed to use a hosted reasoning model for research, with a budget of a few dollars each month. Everyone benefits from the same familiar chat UI.

Private research workstation

You keep a local model for quick drafting and pair it with a web search pipeline to bring in fresh links when you ask. RAG is available for your PDFs and notes. With the search integration switched off, everything runs entirely offline, and you toggle it back on only when you need current results.

Maker’s sandbox

You add image generation via ComfyUI or AUTOMATIC1111 when you want it. It is not one click, but once connected you can generate images from inside chat.

Tradeoffs of Open WebUI

Memories are improving but not perfect

Open WebUI has an experimental Memory option in Settings and a growing set of community memory filters. People are actively proposing changes to make memory more reliable and selective. If you rely on the deeply integrated memory you get in polished hosted apps, expect to tinker.

Web search and image generation require manual setup

Search typically needs SearXNG or another search API. Hosting one yourself adds setup work, and a paid API adds to your costs. Image generation needs a backend like ComfyUI or AUTOMATIC1111. The parts are documented, but you do the wiring yourself.

Usage quotas are available, not turnkey

There are rate limit pipelines and community token tracking libraries. For strict per-user spending limits you usually bring in a proxy like LiteLLM. It works well once configured, although it is one more moving piece to learn.
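To show what "strict per-user spend" means in practice, here is a minimal sketch of a monthly budget check. It is not how LiteLLM implements budgets and it does not call any LiteLLM API. The `BudgetTracker` class, the user names, and the amounts are hypothetical, and I keep money in whole cents to avoid floating-point drift.

```python
from dataclasses import dataclass, field


@dataclass
class BudgetTracker:
    """Track spend per user per calendar month, in cents.

    Hypothetical illustration; users without a configured cap are unlimited.
    """

    monthly_cap_cents: dict[str, int]
    # (user, "YYYY-MM") -> cents spent so far in that month
    spent_cents: dict[tuple[str, str], int] = field(default_factory=dict)

    def can_spend(self, user: str, month: str, cost_cents: int) -> bool:
        cap = self.monthly_cap_cents.get(user)
        if cap is None:
            return True  # no cap configured for this user
        return self.spent_cents.get((user, month), 0) + cost_cents <= cap

    def record(self, user: str, month: str, cost_cents: int) -> bool:
        """Record the spend if it fits the budget; return whether it was allowed."""
        if not self.can_spend(user, month, cost_cents):
            return False
        key = (user, month)
        self.spent_cents[key] = self.spent_cents.get(key, 0) + cost_cents
        return True


if __name__ == "__main__":
    # ASSUMPTION: one adult gets a 3 dollar monthly budget for hosted models.
    tracker = BudgetTracker({"researcher": 300})
    print(tracker.record("researcher", "2025-01", 200))
    print(tracker.record("researcher", "2025-01", 150))
    print(tracker.record("researcher", "2025-02", 150))
```

Keying the ledger by month means the budget resets on its own when the calendar rolls over, with no scheduled job to forget.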

Community is growing, which is great, and also noisy

The good news is there are many discussions, functions, and tutorials. The flip side is that some features live in issues or community repos before they land in the core product, so your path may involve a plugin or two. The visible growth and the official site’s user count are strong signs that the project is moving fast.

Conclusion

Open WebUI is not a one-size-fits-all replacement for hosted assistants, yet it is an excellent middle path if you want a familiar chat experience, multiple models in one place, and real control over costs and access. I have been thinking about it exactly this way for households that do not want to spend 40 to 80 dollars every month when one or two people barely use the tool. The big advantages are a single interface with many models, granular user access that suits a family, and no arbitrary request limits unless you add them. The disadvantages are real but manageable, mostly around memories that are still maturing and integrations like search and image generation that you need to wire up. If you are comfortable with Docker and a config file, the payoff is a private and flexible assistant that adapts to how you actually use AI.